Evaluation Metrics

[!question]- Give some metrics for unranked search

[!question]- Why is accuracy a terrible metric for evaluating IR systems?

[!question]- What happens to recall when the number of docs retrieved increases?

[!question]- What happens to precision when recall increases?

[!question]- What is the F measure?

[!question]- What is R-precision? Suppose we know the total number of relevant documents, R (say R = 15). R-precision is the precision of the first R retrieved documents (here, the first 15).
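A minimal Python sketch of R-precision, assuming the ranking is given as a list of booleans (True = relevant) and that positions beyond the end of a short result list count as non-relevant, so we always divide by R. The function name `r_precision` is a hypothetical helper, and the example reuses the ranking from the 11-point example in these notes (relevant results at D2, D4 and D6, with 15 relevant documents in total).

```python
from typing import Sequence


def r_precision(relevance: Sequence[bool], total_relevant: int) -> float:
    """Precision of the top R results, where R = total_relevant.

    relevance[i] is True if the result at rank i + 1 is relevant.
    If fewer than R results were retrieved, the missing positions
    count as non-relevant (we still divide by R).
    """
    if total_relevant <= 0:
        raise ValueError("total_relevant must be positive")
    top_r = relevance[:total_relevant]
    return sum(top_r) / total_relevant


# Ranking from the 11-point example: relevant at D2, D4, D6; R = 15.
ranking = [False, True, False, True, False, True, False, False, False, False]
print(r_precision(ranking, 15))  # 3 / 15 = 0.2
```

Only 10 documents were retrieved here, so the last 5 of the top 15 positions are treated as non-relevant.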

[!question]- What is the kappa measure used for? Two judges can sometimes agree on a judgment purely by chance. The kappa measure corrects for this chance agreement, so it gauges how much the judges really agree.

[!question]- What is the formula for the kappa measure? $\displaystyle \kappa = \frac{P(A) - P(E)}{1 - P(E)}$, where $P(A)$ is the observed proportion of agreement between the judges and $P(E)$ is the probability that they agree by chance.

Procedure to draw an 11-point interpolated precision-recall curve

- Given:
  - the total number of relevant documents
  - a ranked list of results, each labelled relevant or not relevant
- Example:
  - N = 15 (total number of relevant documents)
  - Results: D1, D2, D3, D4, D5, D6, D7, D8, D9, D10 (relevant: D2, D4, D6)
- Find the precision and recall for each subset (top-k prefix) of the results

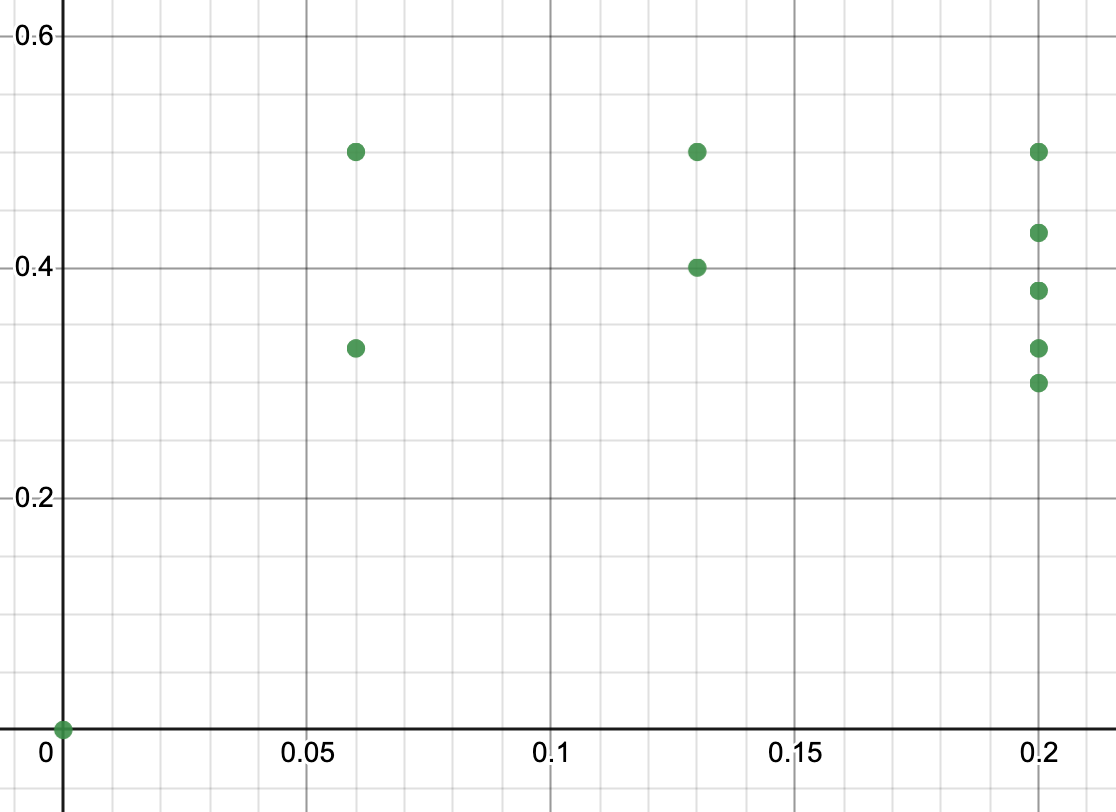

| Subset | Precision | Recall |
|---|---|---|
| D1 | 0 | 0 |
| D1,D2 | 0.50 | 0.07 |
| D1,D2,D3 | 0.33 | 0.07 |
| D1,D2,D3,D4 | 0.50 | 0.13 |
| D1,D2,D3,D4,D5 | 0.40 | 0.13 |
| D1,D2,D3,D4,D5,D6 | 0.50 | 0.20 |
| D1,D2,D3,D4,D5,D6,D7 | 0.43 | 0.20 |
| D1,D2,D3,D4,D5,D6,D7,D8 | 0.38 | 0.20 |
| D1,D2,D3,D4,D5,D6,D7,D8,D9 | 0.33 | 0.20 |
| D1,D2,D3,D4,D5,D6,D7,D8,D9,D10 | 0.30 | 0.20 |
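The table can be reproduced with a short Python sketch. It assumes the ranking is a list of booleans (True = relevant) and that precision is relevant-retrieved / retrieved while recall is relevant-retrieved / N; `precision_recall_by_rank` is a hypothetical helper name. Note that the recall after one relevant document is 1/15 ≈ 0.067, which rounds to 0.07.

```python
from typing import List, Sequence, Tuple


def precision_recall_by_rank(
    relevance: Sequence[bool], total_relevant: int
) -> List[Tuple[float, float]]:
    """Return (precision, recall) for the top-k results, for k = 1..len(relevance)."""
    points = []
    hits = 0  # relevant documents seen so far
    for k, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
        precision = hits / k            # relevant retrieved / retrieved
        recall = hits / total_relevant  # relevant retrieved / all relevant
        points.append((precision, recall))
    return points


# Example from these notes: N = 15, relevant results at D2, D4 and D6.
ranking = [False, True, False, True, False, True, False, False, False, False]
for k, (p, r) in enumerate(precision_recall_by_rank(ranking, 15), start=1):
    print(f"top {k:2d}: precision={p:.2f} recall={r:.2f}")
```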

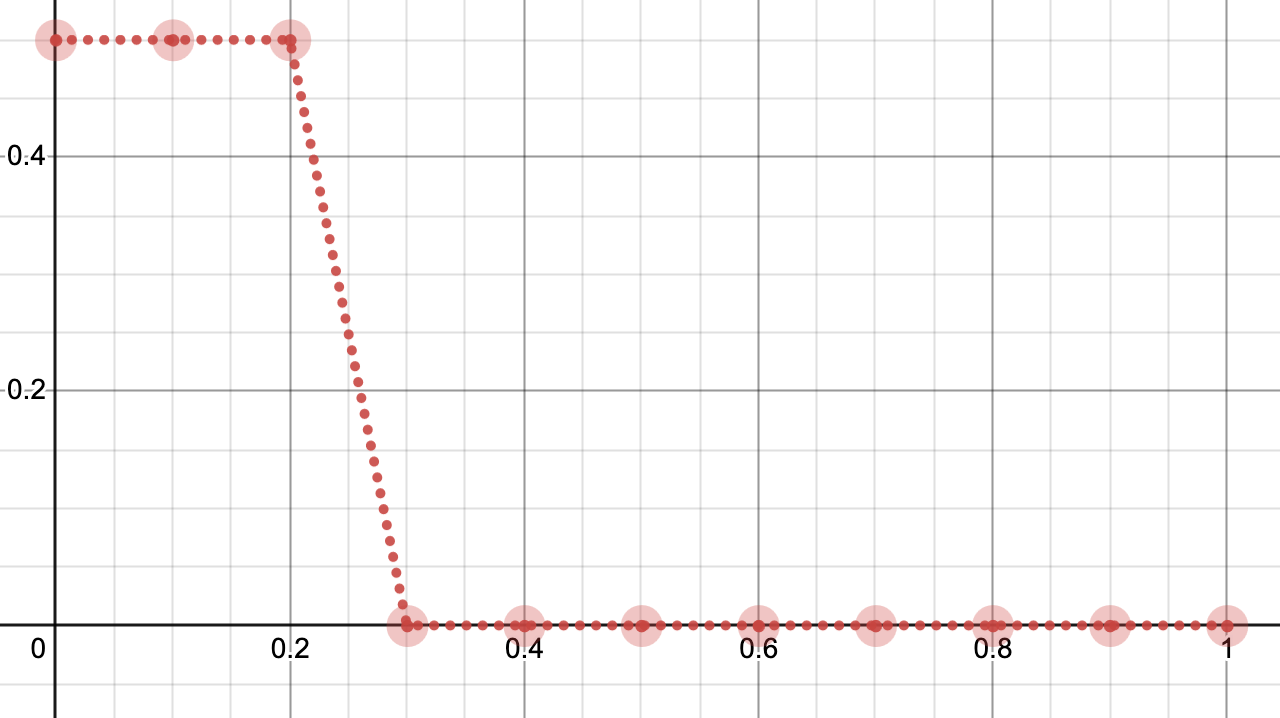

- But we want the precision at each of the 11 standard recall levels: {0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0}
- How do we interpolate this?
- At each recall level $r$, look to the right of the graph and take the greatest precision at any recall $r' \ge r$, i.e. $p_{\text{interp}}(r) = \max_{r' \ge r} p(r')$. If there is no point to the right, the interpolated precision is 0.

| Recall | Interpolated precision |
|---|---|
| 0 | 0.5 |
| 0.1 | 0.5 |
| 0.2 | 0.5 |
| 0.3 | 0 |
| 0.4 | 0 |
| 0.5 | 0 |
| 0.6 | 0 |
| 0.7 | 0 |
| 0.8 | 0 |
| 0.9 | 0 |
| 1.0 | 0 |
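A minimal Python sketch of the "look to the right" rule, assuming the same boolean ranking as the example and treating a recall level with no point at or beyond it as precision 0. The name `eleven_point_interpolated` is hypothetical; the block recomputes the per-rank (precision, recall) points so it runs on its own.

```python
from typing import List, Sequence, Tuple


def eleven_point_interpolated(
    relevance: Sequence[bool], total_relevant: int
) -> List[Tuple[float, float]]:
    """Interpolated precision at recall levels 0.0, 0.1, ..., 1.0.

    p_interp(r) = max precision over ranks whose recall is >= r (0 if none).
    """
    # (precision, recall) after each rank
    points = []
    hits = 0
    for k, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
        points.append((hits / k, hits / total_relevant))

    curve = []
    for i in range(11):
        level = i / 10
        # "Look to the right": best precision among points with recall >= level
        best = max((p for p, r in points if r >= level), default=0.0)
        curve.append((level, best))
    return curve


ranking = [False, True, False, True, False, True, False, False, False, False]
for level, p in eleven_point_interpolated(ranking, 15):
    print(f"recall {level:.1f}: precision {p:.2f}")
```

Because this ranking never reaches a recall above 0.2, every level from 0.3 upward falls back to 0.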

MAP

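- Similar procedure as before, but there is no interpolation
- Calculate the precision every time a relevant doc is retrieved
  - For the example above, we calculate it at D2, D4 and D6
    - 0.5, 0.5, 0.5
- Take the average of these values
  - 0.5
  - Note: the standard definition divides by the total number of relevant documents (here N = 15), so relevant documents that are never retrieved count as precision 0, giving (0.5 + 0.5 + 0.5) / 15 = 0.1
- Repeat the same for all other queries
- At the end we have something like this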

| Query | Avg. Precision |
|---|---|
| Q1 | 0.53 |
| Q2 | 0.49 |
| Q3 | 0.69 |
| … | … |
| QN | 0.42 |

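- Finally, take the mean of these per-query average precisions: $\text{MAP} = \frac{1}{|Q|} \sum_{q \in Q} \text{AP}(q)$. This is called the mean average precision (MAP).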
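A minimal Python sketch of average precision and MAP, assuming each query's ranking is a list of booleans. `average_precision` and `mean_average_precision` are hypothetical helpers, and `total_relevant` is an assumed optional parameter: when given, the sum of precisions is divided by it, so relevant documents that were never retrieved contribute 0 (the textbook definition); when omitted, it divides by the number of relevant documents actually retrieved, which reproduces the 0.5 computed in these notes.

```python
from typing import Optional, Sequence, Tuple


def average_precision(
    relevance: Sequence[bool], total_relevant: Optional[int] = None
) -> float:
    """Average of the precision values taken at each relevant result.

    If total_relevant is given, divide by it (unretrieved relevant docs count
    as 0). Otherwise divide by the number of relevant docs retrieved.
    """
    hits = 0
    precisions = []
    for k, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            precisions.append(hits / k)  # precision at this relevant doc
    denominator = total_relevant if total_relevant is not None else len(precisions)
    return sum(precisions) / denominator if denominator else 0.0


def mean_average_precision(
    queries: Sequence[Tuple[Sequence[bool], Optional[int]]]
) -> float:
    """MAP: the mean of the per-query average precisions."""
    return sum(average_precision(rel, n) for rel, n in queries) / len(queries)


ranking = [False, True, False, True, False, True, False, False, False, False]
print(average_precision(ranking))      # (0.5 + 0.5 + 0.5) / 3 = 0.5
print(average_precision(ranking, 15))  # (0.5 + 0.5 + 0.5) / 15 = 0.1

# Two made-up queries, only to illustrate the final averaging step.
print(mean_average_precision([(ranking, None), ([True, False, True], None)]))
```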

Kappa measure

$P(A) = \dfrac{tp + tn}{total}$, $\quad P(E) = P(P_1)\,P(P_2) + P(N_1)\,P(N_2)$

where

- $tp$ is the number of samples both judges label positive, $tn$ the number both label negative, and $total$ the total number of samples
- $P(P_1)$ is the probability that judge 1 labels a sample positive
- $P(P_2)$ is the probability that judge 2 labels a sample positive
- $P(N_1)$ is the probability that judge 1 labels a sample negative
- $P(N_2)$ is the probability that judge 2 labels a sample negative
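A minimal Python sketch of the kappa computation for two judges making binary judgments, assuming each judge's labels are a list of booleans (True = positive) and estimating $P(P_1)$, $P(P_2)$ as each judge's own fraction of positive labels, as in the formula here. Some texts instead pool the two judges' label rates when computing $P(E)$. The function name `kappa` and the example labels are hypothetical.

```python
from typing import Sequence


def kappa(judge1: Sequence[bool], judge2: Sequence[bool]) -> float:
    """Kappa for two judges making binary (positive / negative) judgments.

    P(A): fraction of samples on which the judges agree.
    P(E): chance agreement, from each judge's own positive/negative rates.
    """
    if len(judge1) != len(judge2) or not judge1:
        raise ValueError("need two non-empty label lists of equal length")
    total = len(judge1)
    tp = sum(a and b for a, b in zip(judge1, judge2))          # both positive
    tn = sum(not a and not b for a, b in zip(judge1, judge2))  # both negative
    p_a = (tp + tn) / total

    p_pos1 = sum(judge1) / total  # P(P_1); P(N_1) = 1 - P(P_1)
    p_pos2 = sum(judge2) / total  # P(P_2); P(N_2) = 1 - P(P_2)
    p_e = p_pos1 * p_pos2 + (1 - p_pos1) * (1 - p_pos2)

    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_a - p_e) / (1 - p_e)


# Made-up labels for 10 documents.
j1 = [True, True, False, False, True, False, True, False, False, False]
j2 = [True, False, False, False, True, False, True, True, False, False]
print(round(kappa(j1, j2), 3))  # 0.583
```

Here the judges agree on 8 of 10 documents, so $P(A) = 0.8$; each labels 4 of 10 positive, so $P(E) = 0.4 \cdot 0.4 + 0.6 \cdot 0.6 = 0.52$ and $\kappa = 0.28 / 0.48 \approx 0.583$.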